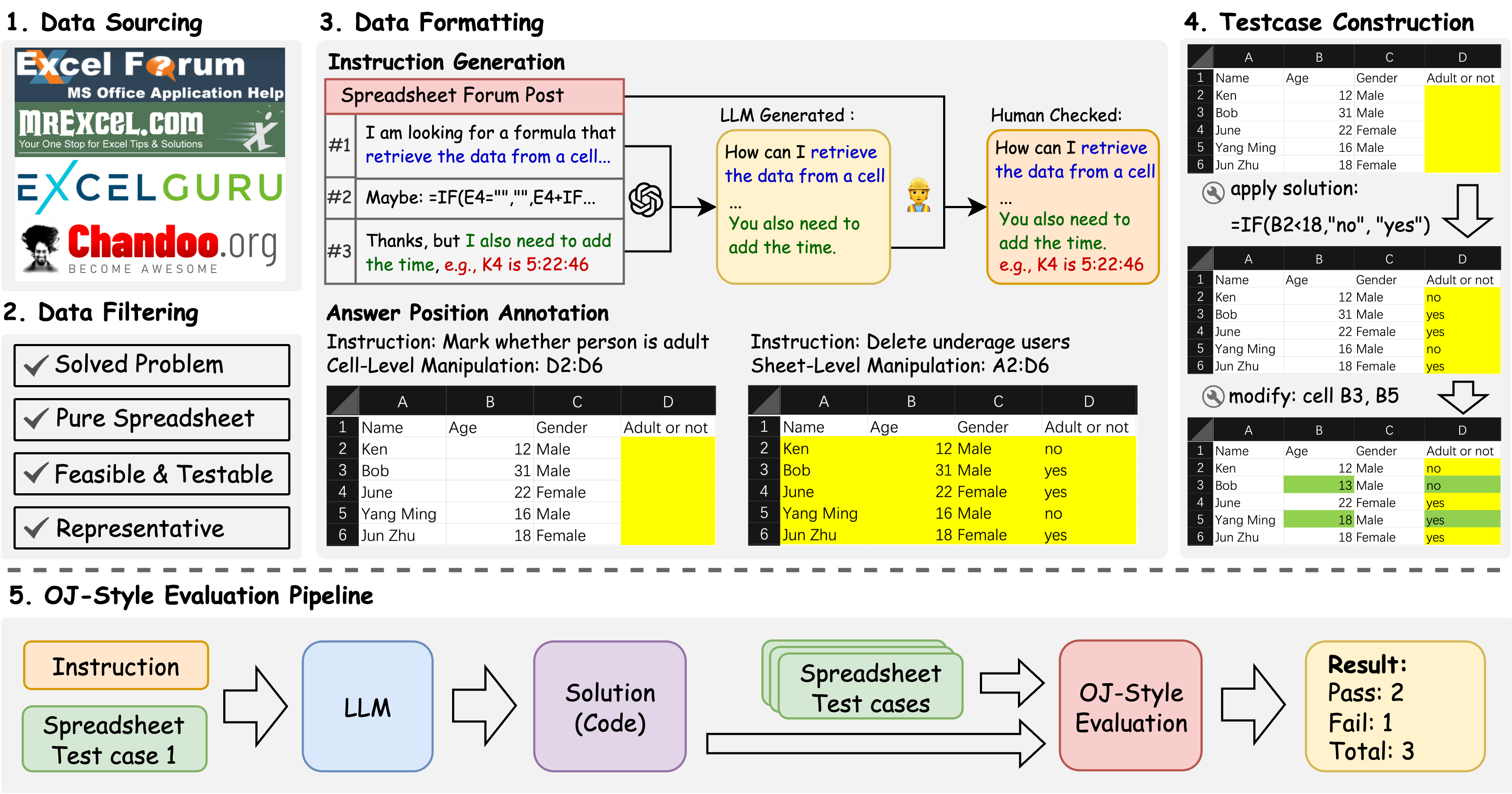

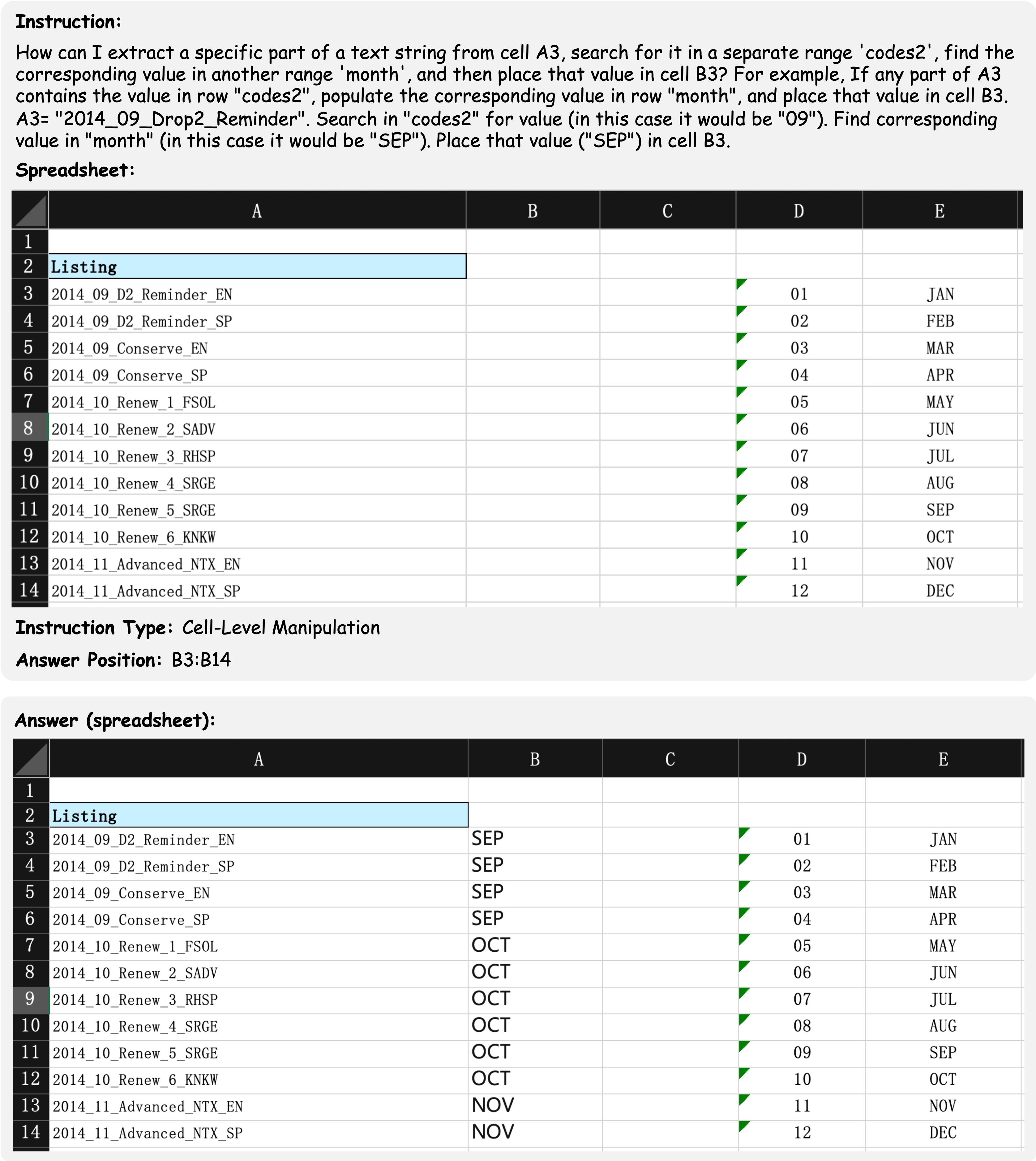

Example 1: A cell-level manipulation question involving a non-standard relational table (columns D and E have no header).

This example shows a cell-level manipulation question that aims to extract a specific part of a text string from a column of cells. The instruction contains both the user's demand and an example manipulation for the question, a combination that rarely occurs in the synthetic instructions of previous benchmarks. Furthermore, the table within the spreadsheet file is a non-standard relational table that lacks a complete table header. The final result must be filled in cells B3 to B14, which ensures the uniqueness of the answer.
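As a rough illustration of why a fixed answer range makes the result checkable, the following minimal sketch fills B3 to B14 with one extracted value per source cell. Everything specific here is an assumption made for illustration: the text does not state the source column, the cell contents, or which part of the string is wanted, so the column `A` inputs, the parenthesised substring, and the helper names `extract_part` and `fill_answer_range` are all hypothetical.

```python
import re

# Hypothetical sheet contents (cell address -> value). The real spreadsheet's
# values and the exact substring to extract are not given in the text.
sheet = {f"A{row}": f"Item-{row:03d} (batch {row * 7})" for row in range(3, 15)}


def extract_part(text: str) -> str:
    """Return the text inside the first pair of parentheses, or '' if none."""
    match = re.search(r"\(([^)]*)\)", text)
    return match.group(1) if match else ""


def fill_answer_range(sheet, src_col="A", dst_col="B", first_row=3, last_row=14):
    """Write one extracted value per source cell into the answer range."""
    for row in range(first_row, last_row + 1):
        source_value = str(sheet.get(f"{src_col}{row}", ""))
        sheet[f"{dst_col}{row}"] = extract_part(source_value)
    return sheet


fill_answer_range(sheet)
```

Because every output lands in a known cell, a checker can compare B3 to B14 cell by cell against the expected values without guessing where the solution wrote its answer.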

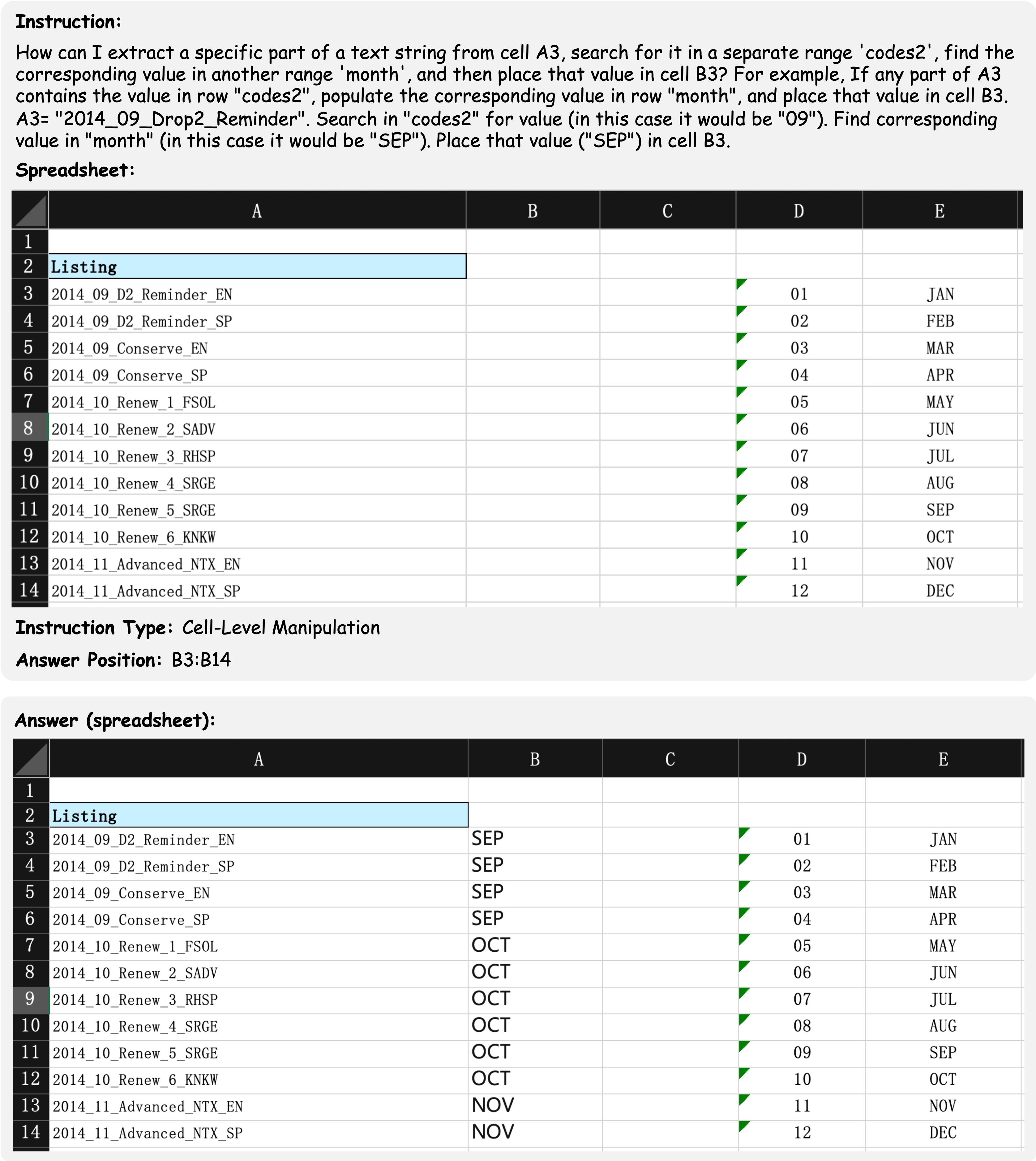

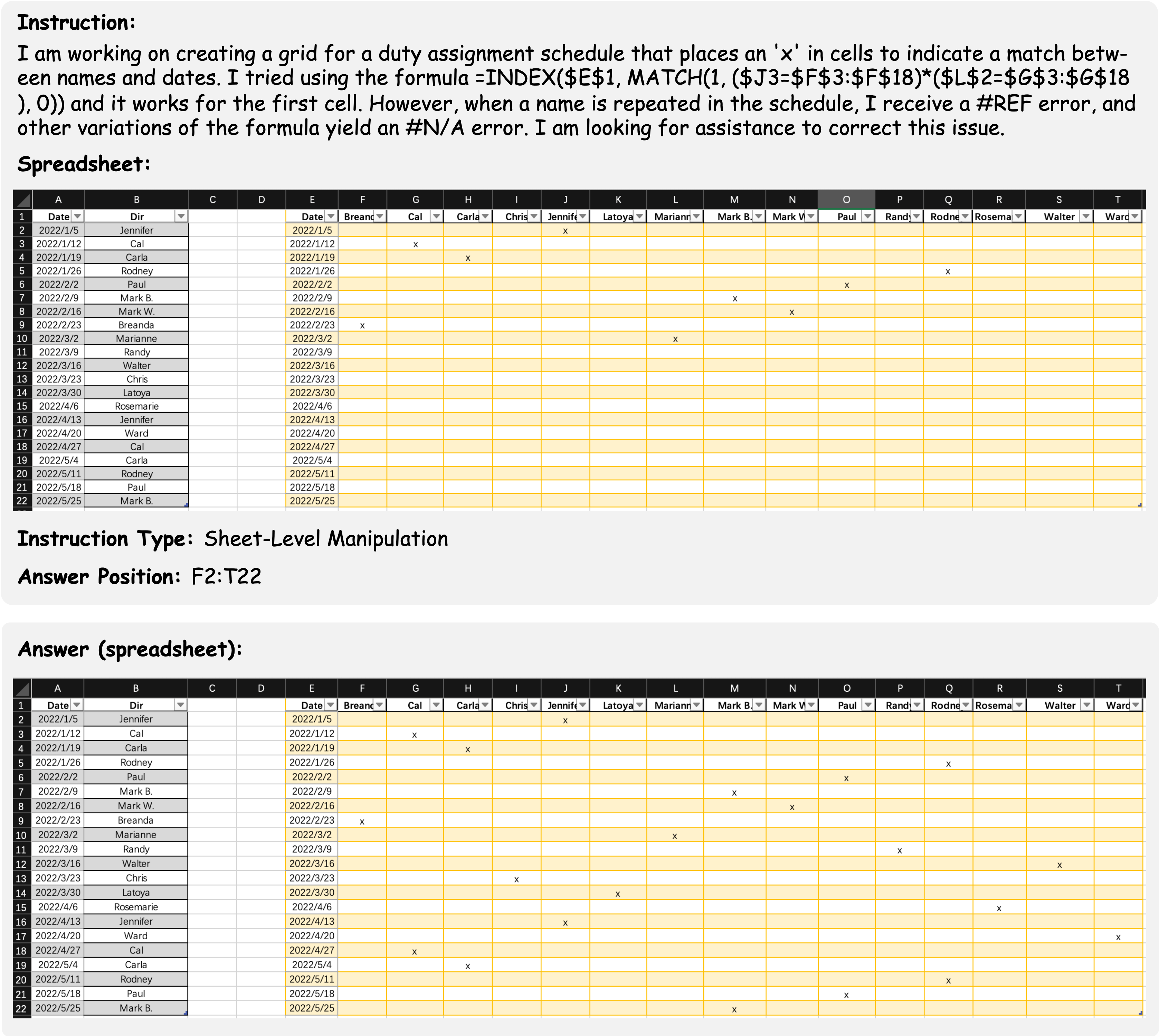

Example 2: A sheet-level manipulation question involving multiple tables in one sheet (first table from A1 to B22; second table from E1 to T22).

This example shows a sheet-level manipulation question that aims to create a grid for a duty assignment schedule. The instruction contains the user's demand, the current formula solution, and the error encountered by the user. In addition, the spreadsheet file contains two relational tables in one sheet. The final result should be filled in a two-dimensional range (i.e., F2 to T22).

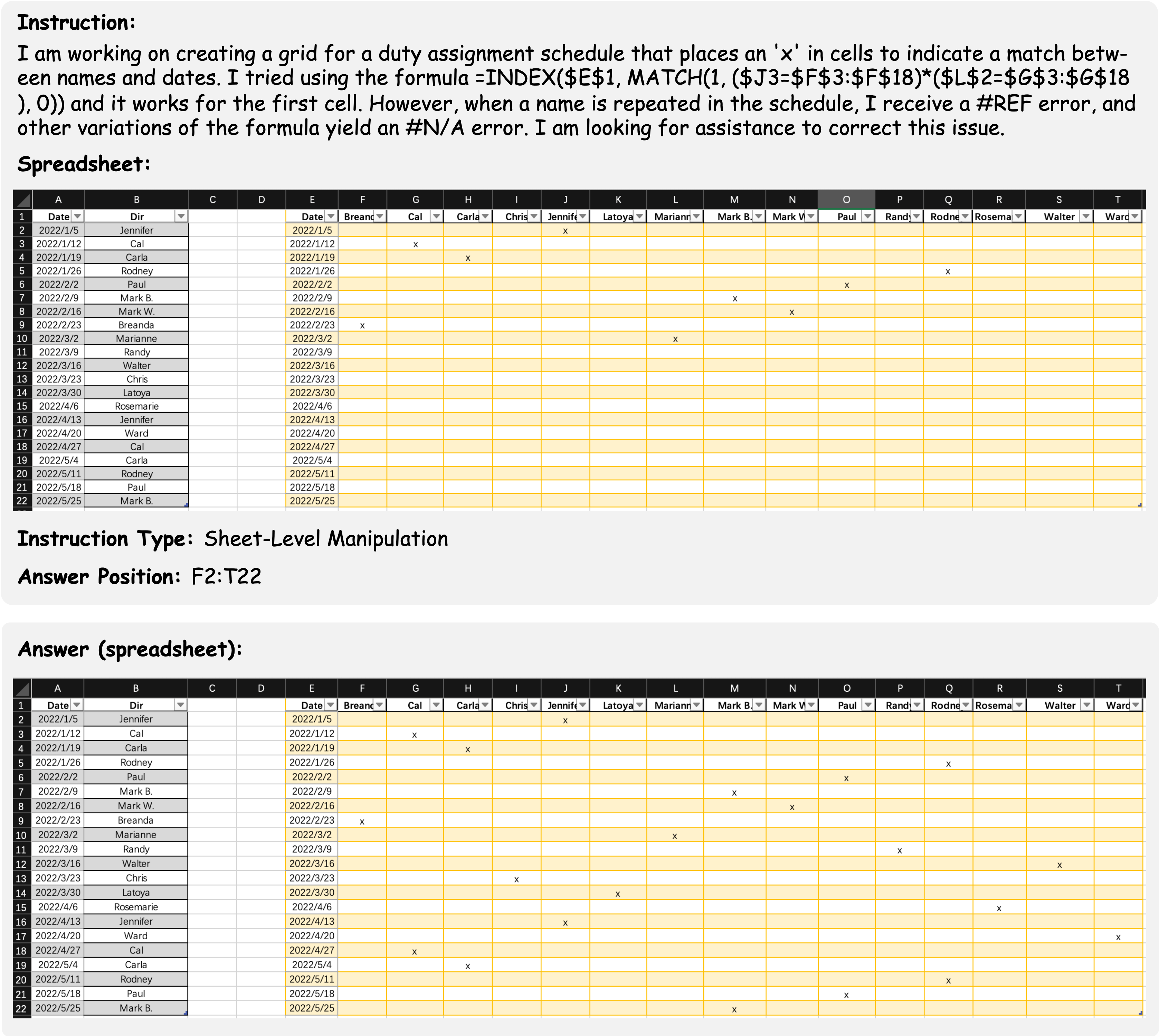

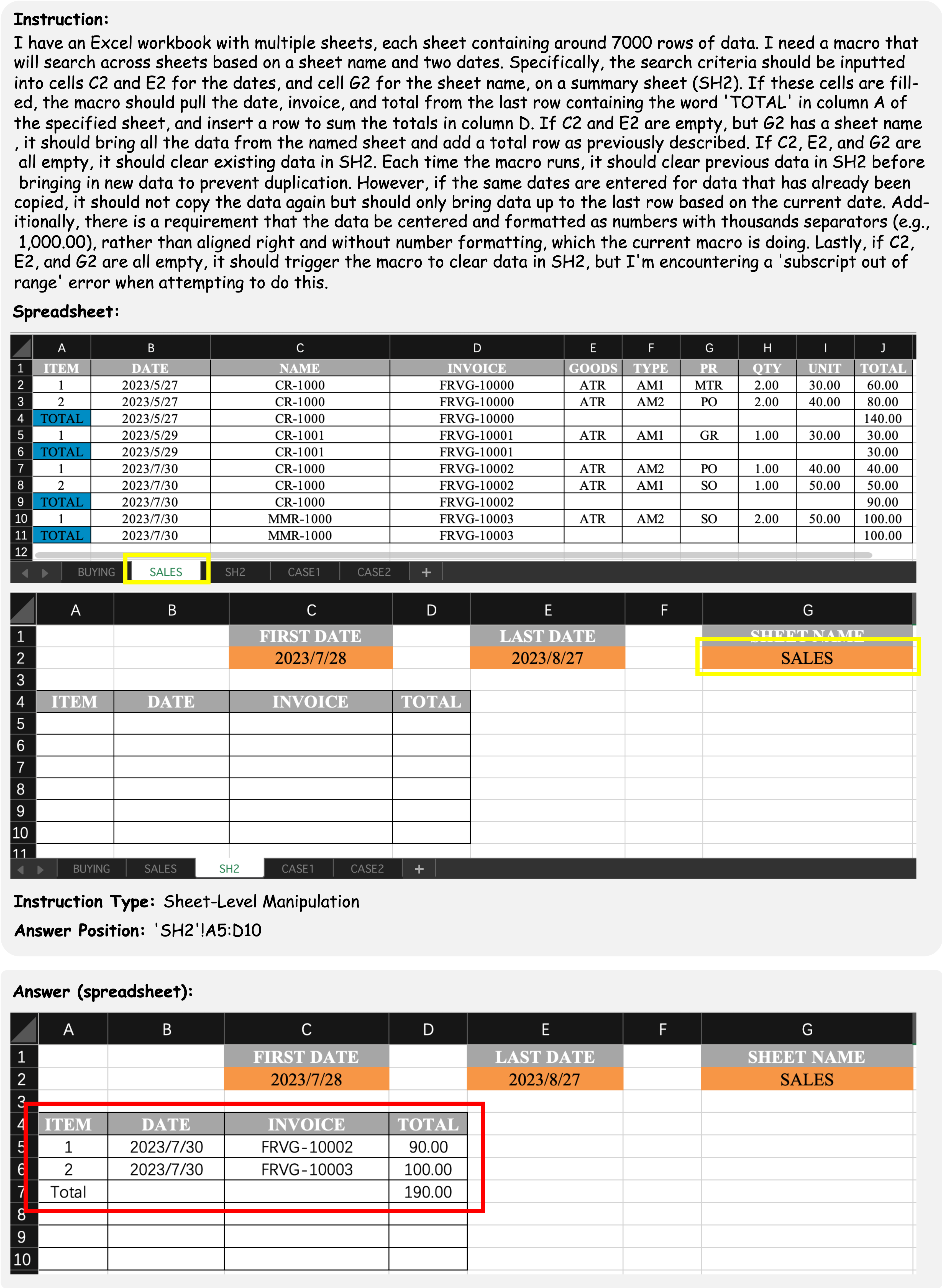

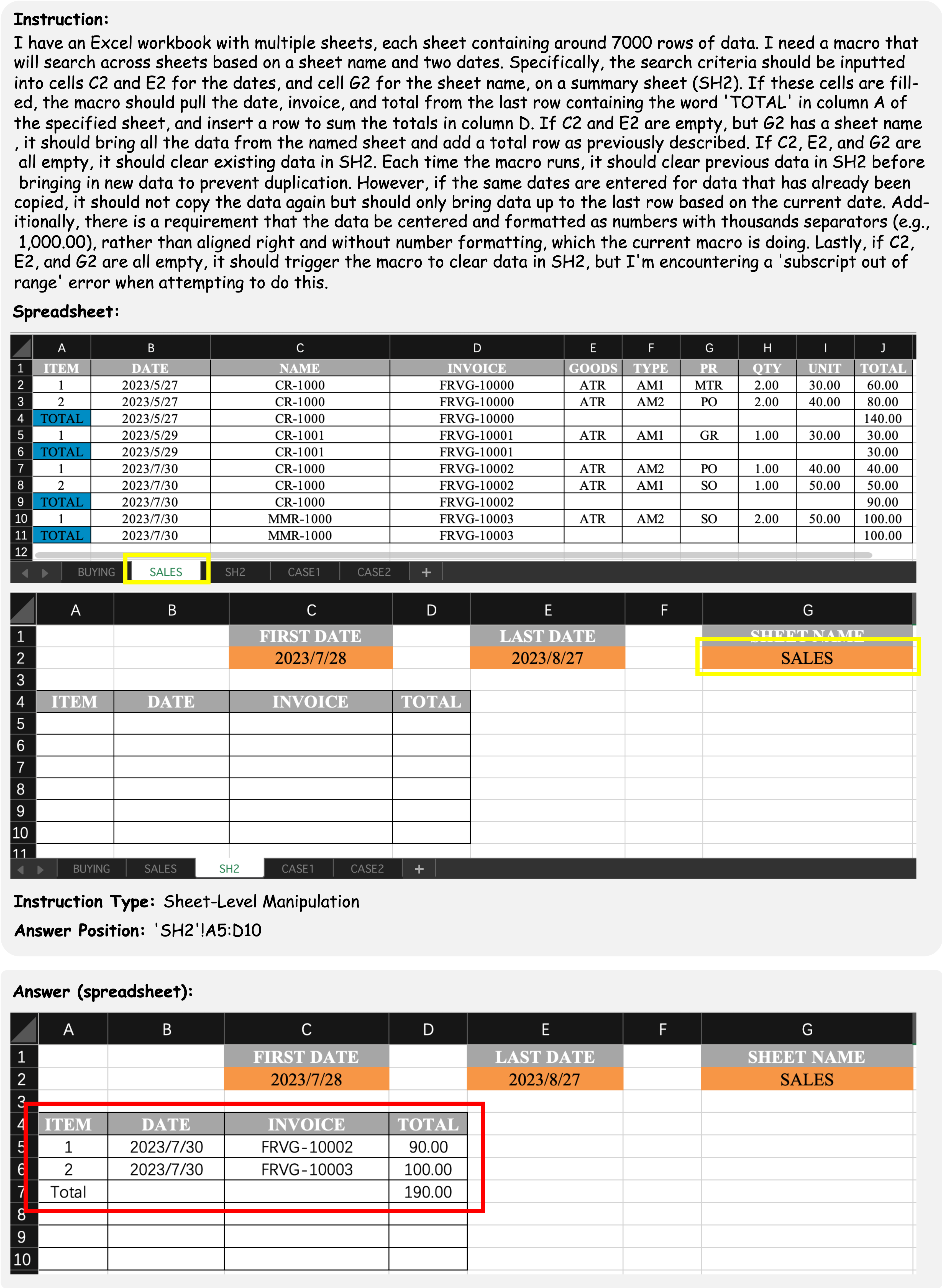

Example 3: A sheet-level manipulation question involving multiple tables on multiple sheets.

This example shows a sheet-level manipulation question that aims to search across sheets based on a sheet name and two dates (i.e., a first date and a last date). The instruction contains a complex requirement, a detailed explanation, a manipulation example, and the error encountered by the user. Moreover, the spreadsheet file contains multiple sheets, including the tables to be manipulated (e.g., sheets named BUYING or SALES) and examples of possible answers (e.g., sheets named CASE1 or CASE2). The final result should be inserted into a two-dimensional range on the sheet named SH2.

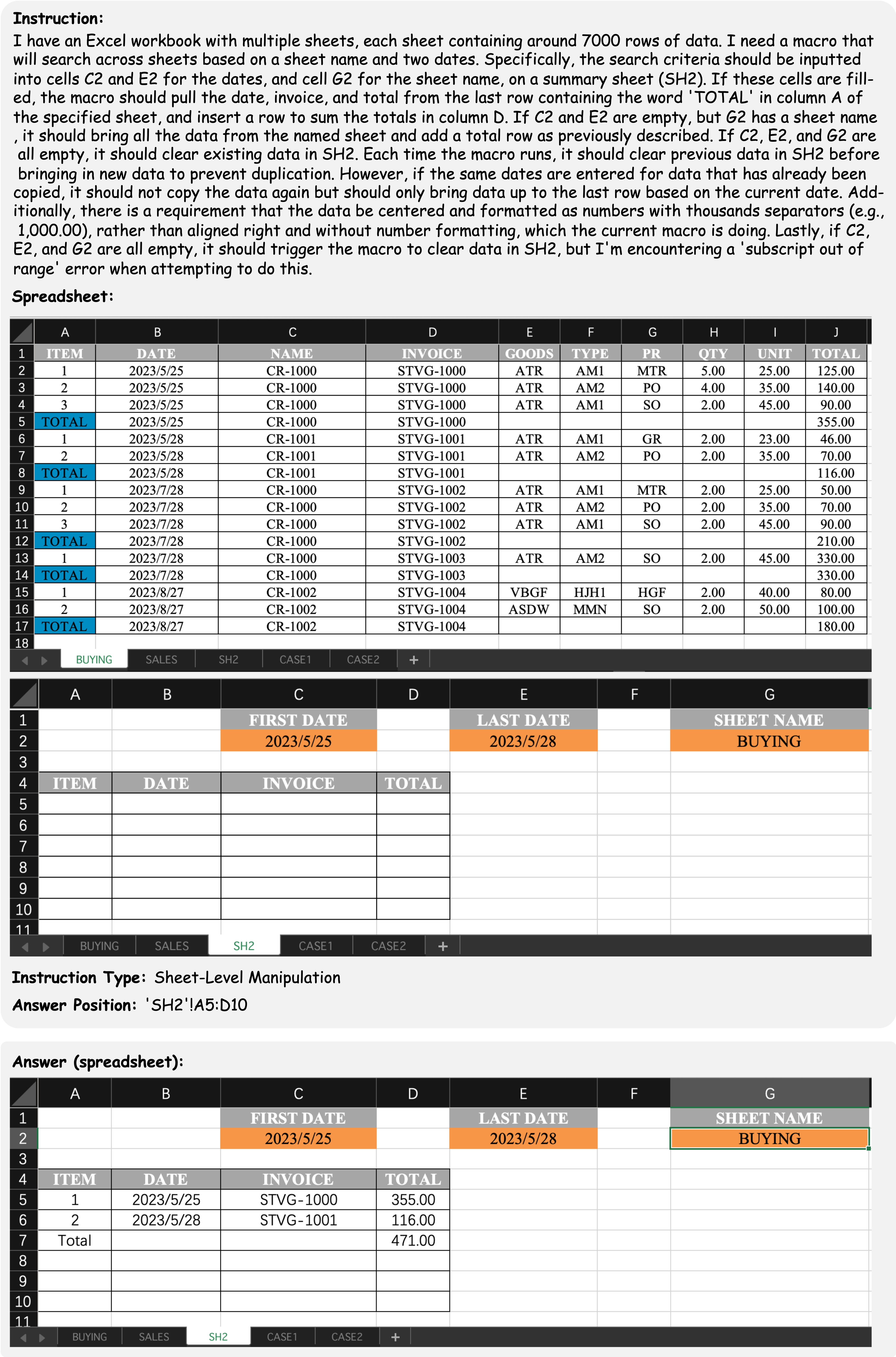

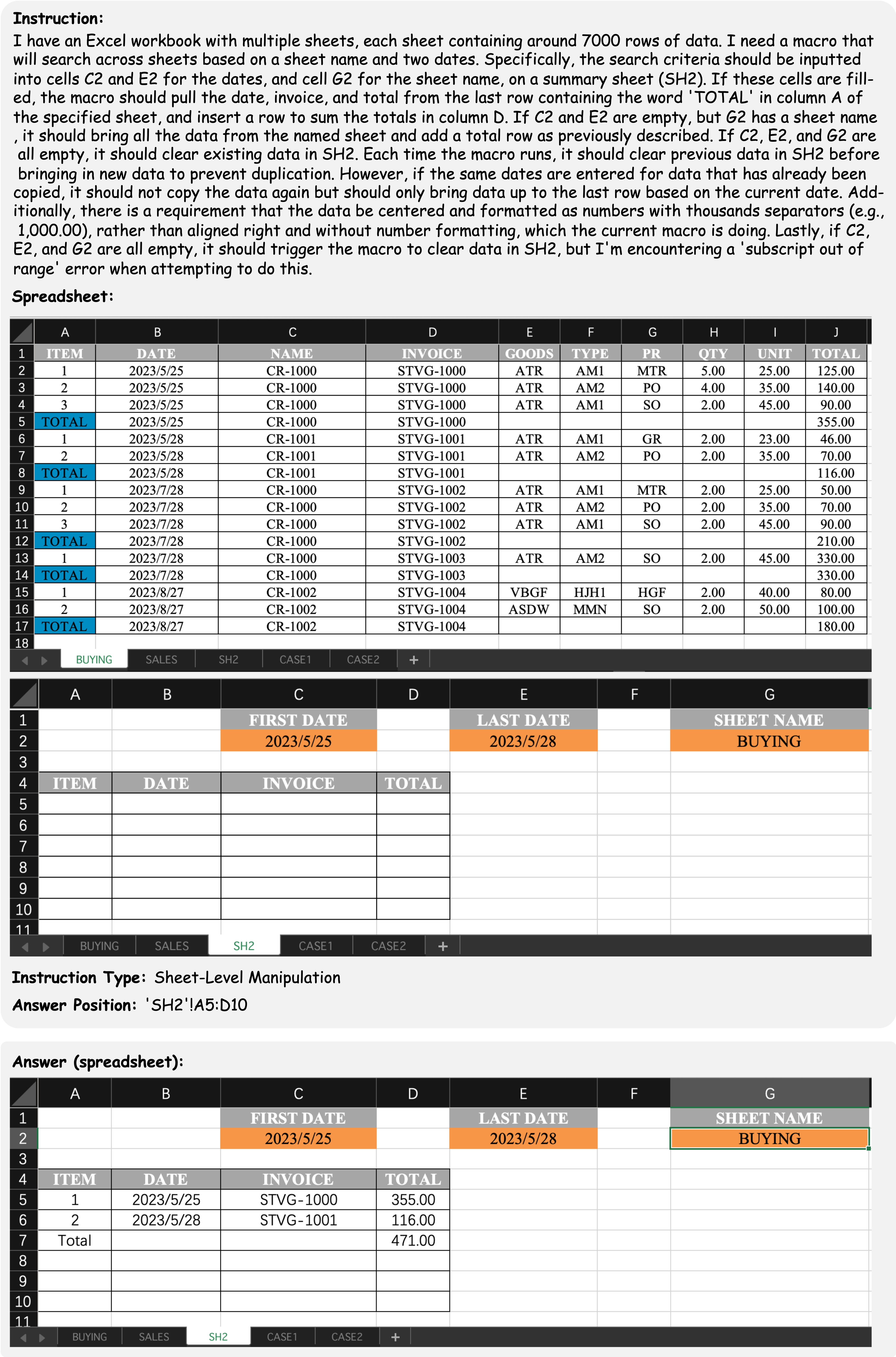

Example 4: A test case modified from the question in Example 3.

This example shows a test case modified from the question in Example 3. The test case is constructed following the user's requirements in the instruction. The user expects a result obtained by searching within the sheet named in cell G2. Only the rows with a date falling between the first date (cell C2) and the last date (cell E2) should be returned. Therefore, we modify the value of the sheet name (cell G2) to construct a new test case, as highlighted in yellow. This test case yields search results from the SALES sheet, whereas the original case searches the BUYING sheet. The values in the answer position also change, as highlighted in red. By modifying the sheet name, we can alter the sheet that is being searched, enabling a more comprehensive assessment of the solution's effectiveness.
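The idea of deriving a second test case by changing only the sheet name can be sketched as follows. This is a minimal illustration, not the benchmark's actual tooling: the workbook rows, the `search_sheet` helper, and the choice of inclusive date bounds are all assumptions, since the text only says that dates should fall between the first and last date.

```python
from datetime import date

# Hypothetical workbook: sheet name -> list of (date, item, amount) rows.
# The real BUYING and SALES sheets are not reproduced in the text.
workbook = {
    "BUYING": [
        (date(2023, 1, 5), "paper", 120),
        (date(2023, 2, 10), "ink", 80),
        (date(2023, 3, 15), "toner", 200),
    ],
    "SALES": [
        (date(2023, 1, 20), "paper", 300),
        (date(2023, 2, 25), "ink", 150),
        (date(2023, 4, 1), "toner", 500),
    ],
}


def search_sheet(workbook, inputs):
    """Return rows of the sheet named in G2 dated between C2 and E2.

    Bounds are treated as inclusive, which is an assumption.
    """
    rows = workbook[inputs["G2"]]
    return [row for row in rows if inputs["C2"] <= row[0] <= inputs["E2"]]


# Original test case: search the BUYING sheet.
original_case = {"C2": date(2023, 1, 1), "E2": date(2023, 2, 28), "G2": "BUYING"}

# Modified test case: only the sheet name in G2 changes.
modified_case = {**original_case, "G2": "SALES"}

original_answer = search_sheet(workbook, original_case)
modified_answer = search_sheet(workbook, modified_case)
```

A solution that hard-codes the BUYING sheet would still pass the original case but fail the modified one, which is the kind of brittleness that varying G2 is meant to expose.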